AI and Machine Learning in Security Systems: From Training to Deployment

GSOCs (global security operations centers) clear hundreds of false alarms daily, from cameras and motion sensors triggering on weather events to badge readers flooding dashboards with door-ajar notifications. Actual threats blend into the noise, and operators spend more time chasing false positives than preventing security incidents.

AI in physical security promises relief, but separating platforms that deliver from those that create new problems requires understanding what happens in daily operations. How systems learn determines whether they catch real threats or generate new false alarms. How they connect to existing infrastructure affects costs and downtime. Whether they adapt or need constant tuning decides long-term value.

Security teams need practical ways to assess capabilities, match technical features to operational needs, and define metrics that prove value. This article examines how AI systems learn to recognize threats, prove detection accuracy before deployment, integrate with existing cameras and sensors, and improve after launch.

How Security AI Systems Learn to Recognize Threats

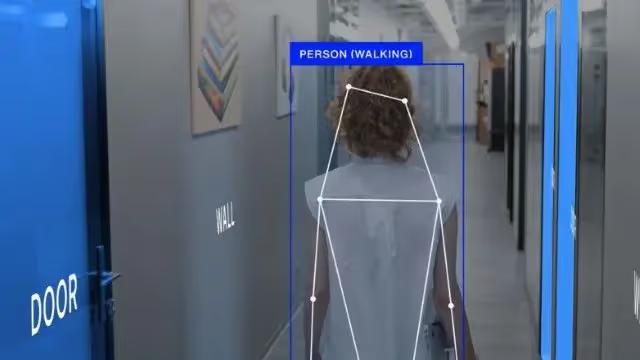

Most security AI platforms are trained on labeled video datasets where operators mark specific events as threats or normal activity. The training process starts with thousands of video clips showing events like lingering near restricted doors, repeated perimeter passes, sudden crowd dispersal, and people taking cover.

The depth and breadth of training data determine platform performance. Real attacks make up only a small fraction of footage, which creates a class imbalance problem: systems trained only on actual incidents miss novel tactics or generate false positives. Platforms that train on extensive datasets covering multiple scenarios, pre-incident behavior, lighting conditions, weather variations, and camera angles perform better in production environments.
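To make the class imbalance concrete, here is a minimal sketch of inverse-frequency class weighting, one common mitigation in general machine learning practice. It is an illustration only, not a description of how any particular security platform trains its models. The function name and the sample label counts are hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by total / (n_classes * class_count).

    Rare classes (for example, real threats) receive larger weights so a
    training loss does not simply learn to predict "normal" everywhere.
    """
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * n) for cls, n in counts.items()}

# Hypothetical labeled clip set: 990 normal clips, 10 threat clips.
labels = ["normal"] * 990 + ["threat"] * 10
weights = inverse_frequency_weights(labels)
print(weights["threat"] / weights["normal"])  # threat clips weigh ~99x more
```

Weighting does not replace broad training data. It only keeps the few threat examples that exist from being drowned out.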

Traditional gun detection systems are trained to recognize objects that visually resemble weapons within a video frame. When the model identifies such an object, it triggers an alert. This method focuses solely on object presence, ignoring the surrounding context that determines intent or threat level. As a result, these systems can only detect visible weapons—not anticipate incidents before they unfold—and are highly prone to false positives when ordinary objects resemble firearms.

The training approach determines whether platforms filter noise or amplify it. Systems trained on diverse datasets adapt to facility-specific patterns. Those trained on narrow datasets flood consoles with alerts triggered by routine activities.

Testing and Validation Before Live Deployment

Systems trained in controlled environments can still fail in lobbies. Validation requires both structured testing against known datasets and field proof through a pilot.

Controlled testing measures precision and speed, but those metrics say little about how a platform will handle cameras on loading docks or feeds from parking structures. For this reason, deployment pilots are critical for operational proof. Cameras stream through the platform for weeks while alerts log silently, and operations compares AI verdicts against actual outcomes. Pilots let ops teams identify what controlled environments may have missed: storefront glare triggering false alerts, forklift movement flagged as breaches, and badge sharing marked as tailgating.

The metrics that matter are false alarms per camera daily and alert latency. These numbers reveal whether platforms reduce workload or simply redistribute it. Poor performance requires retraining before launch. Validation should target operational priorities, including unauthorized access through Lenel doors, tailgating past HID readers, and perimeter breaches.
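The two pilot metrics can be computed directly from a silent-mode alert log. The sketch below assumes a hypothetical log record (`PilotAlert`) with a camera identifier, event and alert timestamps, and an operator verdict. Real platforms will expose different fields.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median

@dataclass
class PilotAlert:
    camera_id: str
    event_time: datetime  # when the event occurred in the footage
    alert_time: datetime  # when the platform logged the alert
    confirmed: bool       # operator verdict: True if the alert was a real event

def false_alarms_per_camera_per_day(alerts, pilot_days):
    """Average daily count of unconfirmed alerts for each camera in the log."""
    per_camera = {}
    for alert in alerts:
        per_camera.setdefault(alert.camera_id, 0)
        if not alert.confirmed:
            per_camera[alert.camera_id] += 1
    return {cam: count / pilot_days for cam, count in per_camera.items()}

def median_alert_latency_seconds(alerts):
    """Median delay between an event and its alert, in seconds."""
    return median((a.alert_time - a.event_time).total_seconds() for a in alerts)

# Hypothetical two-day pilot log.
t0 = datetime(2024, 1, 1, 9, 0, 0)
alerts = [
    PilotAlert("dock-1", t0, t0 + timedelta(seconds=2), confirmed=False),
    PilotAlert("dock-1", t0, t0 + timedelta(seconds=4), confirmed=False),
    PilotAlert("lobby-1", t0, t0 + timedelta(seconds=1), confirmed=True),
]
rates = false_alarms_per_camera_per_day(alerts, pilot_days=2)
latency = median_alert_latency_seconds(alerts)
```

Tracking the rate per camera rather than as a site-wide total makes it easier to see whether a handful of badly placed cameras account for most of the noise.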

Deployment Architecture That Preserves Existing Infrastructure

Validation proves the system works in a pilot. Architecture determines whether it scales across the enterprise without replacing existing infrastructure.

The challenge lies in working with cameras, VMS platforms, and access controllers already in place. Cloud-first designs sound appealing until bandwidth costs spiral from streaming every frame off-site. Pure edge deployments deliver sub-second response but require expensive hardware at every camera location.

Hybrid architecture balances performance and cost. Critical detections happen locally for instant alerts, while encrypted metadata moves to the cloud for investigations and system-wide updates. This approach allows platforms to integrate with existing cameras and major VMS solutions without infrastructure replacement.

Successful deployment requires verifying several factors:

- VMS platforms must provide API access and event forwarding

- Edge locations need adequate processing capacity

- Door controllers must integrate with PACS for badge correlation

- Networks should support TLS-encrypted transmission with failover protocols for outages

Integration with Security Operations Workflows

Deployment architecture determines scalability. Integration with the technology ecosystem determines usability. Closed systems, and platforms with limited extensibility that force operators to work across siloed tools, tend to fail. Effective systems interoperate with a broad set of established solutions, which protects existing investments, preserves choice, and fits into existing processes.

Take access control verification as an example. First-line triage happens automatically, combining visual context, badge data, door sensor state, and threat assessment to determine severity. Through direct integration with the PACS solution of choice, the AI-powered system auto-clears low-severity or false-positive door-forced-open (DFO) and door-held-open (DHO) alarms while flagging actual tailgating events. The outcome is less alarm and access control noise without added operator burden.
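A highly simplified sketch of this triage logic follows. It assumes video analytics can supply a count of people crossing the door and the PACS can supply the number of accepted badge reads for the same opening. The `DoorEvent` fields, the `Verdict` values, and the rules themselves are hypothetical and far cruder than a production system.

```python
from dataclasses import dataclass
from enum import Enum

class Verdict(Enum):
    AUTO_CLEAR = "auto_clear"
    FLAG_TAILGATING = "flag_tailgating"
    ESCALATE = "escalate"

@dataclass
class DoorEvent:
    alarm_type: str          # "DFO" (door forced open) or "DHO" (door held open)
    valid_badge_swipes: int  # badge reads the PACS accepted for this opening
    people_entered: int      # people seen crossing the door on camera

def triage(event):
    """Correlate badge data with visual context to assign a verdict."""
    # Everyone who entered is covered by a badge read (or nobody entered):
    # treat the alarm as low severity and clear it.
    if event.people_entered <= event.valid_badge_swipes:
        return Verdict.AUTO_CLEAR
    # Someone entered and no badge was presented at all.
    if event.valid_badge_swipes == 0:
        return Verdict.ESCALATE
    # More people than badge reads: someone followed a badge holder in.
    return Verdict.FLAG_TAILGATING

print(triage(DoorEvent("DHO", valid_badge_swipes=1, people_entered=1)))
print(triage(DoorEvent("DFO", valid_badge_swipes=1, people_entered=2)))
```

The point of the sketch is that neither data source alone is enough: the door alarm says something happened, and only the combination of badge and video data says whether it matters.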

Another scenario to consider is investigations. Natural-language search capabilities allow queries like "person carrying a laptop at the front door yesterday afternoon" to return exact clips instantly. Movement tracking across cameras compresses hours of manual video review into minutes.

API-based integrations via webhook endpoints push structured alert data into ticketing systems while honoring escalation paths. Severity labels, camera identifiers, and operator notes map directly to record systems. Integration strengthens the workflows operators already run rather than forcing the adoption of parallel systems.
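As a rough illustration of that mapping, the sketch below converts a webhook alert payload into a ticket record. The payload fields, the priority scheme, and the `alert_to_ticket` helper are all assumptions made for this example. An actual integration would follow the schemas of the alerting platform and the ticketing system in use.

```python
import json

# Hypothetical mapping from alert severity to ticket priority.
SEVERITY_TO_PRIORITY = {"low": "P4", "medium": "P3", "high": "P2", "critical": "P1"}

def alert_to_ticket(alert):
    """Map severity, camera identifier, and operator notes onto ticket fields."""
    severity = alert["severity"]
    return {
        "title": f"[{severity.upper()}] {alert['event_type']} on {alert['camera_id']}",
        "priority": SEVERITY_TO_PRIORITY[severity],
        "camera_id": alert["camera_id"],
        "notes": alert.get("operator_notes", ""),
    }

# Example body as it might arrive at a webhook endpoint.
body = """{
  "severity": "high",
  "event_type": "tailgating",
  "camera_id": "lobby-cam-03",
  "operator_notes": "Second person followed badge holder"
}"""
ticket = alert_to_ticket(json.loads(body))
print(ticket["title"])  # [HIGH] tailgating on lobby-cam-03
```

Keeping the severity-to-priority table as data rather than code lets each team align it with its own escalation paths.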

Model Training and Improvement

Integration connects AI platforms to existing workflows. Model training determines whether platforms deliver high-recall, high-fidelity detection.

Extensive ongoing training on real security camera video, rather than synthetic or generic footage, helps ensure that precision, recall, and latency meet standards before deployment.

Training refinements evolve generic signatures into facility-specific detections. A pattern like "person loitering" becomes "graffiti tagging near loading dock" or "masked individual with crowbar near fence line" based on confirmed incidents at specific sites.

Performance Monitoring and Operational Optimization

Training maintains model accuracy, and performance monitoring prevents small issues from becoming operational disruptions. Deployment marks the start of an ongoing management cycle. Systematic tracking covers detection precision, false positives per camera weekly, alert response times, and investigation duration. SOC dashboards surface these metrics in real time so teams can spot trends before operators experience alert fatigue or miss genuine threats.

When false positives spike at specific sites, the cause is often environmental changes rather than core model issues. New lighting, camera vibration, or altered layouts may require threshold adjustments instead of retraining. Detection gaps, however, indicate signature updates are needed—validated in test environments before rollout to production.
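One simple way to notice a site-level spike is to compare the current week's false positive count against that site's own recent history. The sketch below uses a z-score for this. The function name, the threshold of 3.0, and the sample counts are illustrative assumptions, and the check only flags a site for review. It does not decide between a threshold adjustment and retraining.

```python
from statistics import mean, stdev

def spiking_sites(weekly_false_positives, z_threshold=3.0):
    """Return sites whose latest weekly count is far above their own baseline.

    weekly_false_positives maps site name -> weekly counts, oldest first,
    with the current week as the last element.
    """
    flagged = []
    for site, counts in weekly_false_positives.items():
        history, current = counts[:-1], counts[-1]
        if len(history) < 2:
            continue  # not enough history to estimate a baseline
        baseline, spread = mean(history), stdev(history)
        if spread == 0:
            # Perfectly flat history: any increase counts as a spike.
            if current > baseline:
                flagged.append(site)
            continue
        if (current - baseline) / spread > z_threshold:
            flagged.append(site)
    return flagged

# Hypothetical weekly counts per site.
history = {
    "hq": [10, 12, 11, 9, 40],         # sudden jump, e.g. after new lighting
    "warehouse": [20, 22, 19, 21, 23], # within normal variation
}
flagged = spiking_sites(history)
print(flagged)  # ['hq']
```

Comparing each site against its own baseline matters because a count that is normal for a busy warehouse may be a clear anomaly for a quiet office.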

Because environmental conditions constantly evolve, accuracy must be maintained through adaptive tuning. Construction projects, facility modifications, and seasonal lighting shifts introduce edge cases that initial training never encountered. Vendors should support this with real-time health alerts, weekly performance summaries, and quarterly briefings that map environmental trends to planned updates.

Intelligent Platform Architecture That Streamlines Security Operations

The false alarms flooding GSOC consoles stem from systems that treat physical security as simple surveillance. The AI security lifecycle works as a continuous loop. Training determines what systems recognize. Validation proves they work in real environments. Deployment preserves existing infrastructure. Integration maintains trusted workflows. Monitoring catches drift before it affects operations.

Ambient.ai is the leader in Agentic Physical Security. Ambient is an AI-powered platform that integrates agentic monitoring, investigation, access intelligence and threat assessment and response capabilities in a single solution. Ambient is powered by Ambient Intelligence, a breakthrough AI engine that combines advanced computer vision with the industry-first reasoning Vision-Language Models for physical security (Ambient Pulsar). Ambient seamlessly connects with existing cameras, sensors, and access systems, unifying them into a centralized intelligence layer that augments SOC operators.

Four core capabilities power this transformation.

- Ambient Foundation provides a centralized single-pane-of-glass view of all video feeds, record-and-retrieve capabilities, and full visibility into system health and security KPIs. A dynamic AI video wall surfaces the most relevant feeds, while multi-site management allows operators to oversee global assets from one interface. Built-in sensor health monitoring prevents blind spots, and Ops Insights delivers analytics on operator performance and incident response.

- Ambient Access Intelligence achieves over 95% false alarm reduction from access control systems by connecting PACS data with live camera feeds. This correlation validates every door event automatically, returning hundreds of operator hours to proactive monitoring. Teams resolve door-forced-open events and detect tailgating without manual verification.

- Ambient Threat Detection processes video feeds in real time to detect more than 150 predefined security events, including brandished firearms, perimeter breaches, tailgating, and unauthorized entry. The platform understands behavior through contextual reasoning rather than simple object detection. When people suddenly run from an area, take cover, or exhibit distress, the system can detect threats even when weapons aren't visible by reading behavioral cues.

- Ambient Advanced Forensics enables 20x faster investigations through natural-language search, finding relevant footage in seconds. Operators query "person in red shirt near loading dock" and receive exact clips instantly, compressing days of manual review into minutes.

The platform integrates seamlessly with Genetec, Milestone, Lenel, Axis, and other existing systems without hardware replacement. Trusted by Fortune 100 companies to protect campuses, data centers, and critical infrastructure, Ambient.ai transforms security from a cost center focused on reaction into a strategic asset designed for prevention.

Security teams that apply this evaluation framework separate platforms that reduce operator workload from those that simply redistribute alert fatigue. Schedule your demo to see how Ambient.ai delivers measurable improvements across training, deployment, and ongoing operations.

Key Takeaways

- Training data quality determines real-world performance. Platforms trained on diverse datasets covering multiple scenarios, lighting conditions, and camera angles filter noise effectively, while those trained on narrow datasets flood consoles with false alerts triggered by routine activity.

- Validation requires operational proof, not just controlled testing. Pilot deployments where cameras stream through the platform for weeks reveal issues that lab environments miss, with false alarms per camera daily and alert latency as the metrics that matter most.

- Hybrid architecture balances performance and cost. Edge-based processing delivers instant alerts for critical detections while cloud-based analytics support investigations and system-wide updates, all without replacing existing cameras, VMS, or access control infrastructure.

- Integration determines usability. Platforms that interoperate with existing PACS, VMS, and ticketing systems strengthen workflows operators already run, while closed systems force parallel processes that reduce adoption and effectiveness.

- AI deployment is the start of ongoing management, not the finish line. Environmental changes like new lighting, camera movement, or facility modifications require continuous monitoring and adaptive tuning to maintain detection accuracy over time.
